I was fortunate to present to the AWS Sydney North User Group on the topic “Build Better Containers for ECS with Habitat” (slides here). I was new to Amazon’s Elastic Container Service (ECS), so I figured I’d document my findings as I went.

Habitat

If you’re unfamiliar with Habitat, go read through the introduction and try out the tutorial. It’s a new project with the ambitious goal of providing

Application automation that enables modern application teams to build, deploy, and run any application in any environment – from traditional data-centers to containerized microservices.

I’m not going to dive too far into Habitat, but it makes building portable applications and exporting them to Docker really easy. For demonstration purposes, I reused the National Parks demo from this recent Chef blog post.

The National Parks plan Bill used in his blog post was published to GitHub and the MongoDB plan was published to the Habitat depot, so I could build my own Docker images with them. Assuming you have Habitat installed and your docker-machine running, you can build Docker images for the Mongo database and the National Parks Tomcat application (after checking the plan out from GitHub).

$ git clone git@github.com:billmeyer/national-parks-plan.git
$ cd national-parks-plan
$ hab studio enter

And once you’re in the studio

[1][default:/src:0]# build
...
[2][default:/src:0]# hab pkg export docker mattray/national-parks
...
[3][default:/src:0]# hab pkg export docker billmeyer/mongodb

After exiting the studio, your docker images are ready.

$ docker images
REPOSITORY                                  TAG                    IMAGE ID       CREATED          SIZE
billmeyer/mongodb                           3.2.6-20160824195527   dc1e785cb432   8 seconds ago    301 MB
billmeyer/mongodb                           latest                 dc1e785cb432   8 seconds ago    301 MB
mattray/national-parks                      0.1.3-20161026234736   bdf5dc7b7465   32 seconds ago   708.5 MB
mattray/national-parks                      latest                 bdf5dc7b7465   32 seconds ago   708.5 MB
habitat-docker-registry.bintray.io/studio   0.11.0                 7ebd429888ef   12 days ago      293.4 MB

EC2 Container Registry

Once we’ve got our containers built locally, it’s time to move them to Amazon’s EC2 Container Registry (ECR), their private Docker registry. I’m not going to go into the specifics of configuring your AWS developer setup, but you’ll need the aws and ecs-cli tools installed. First we’ll need to request login credentials for the ECR registry:

$ aws ecr get-login

and use the docker login command it prints to log in to our new ECR Docker registry.

$ docker login ...
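The two steps above can be folded into one, because get-login prints a complete docker login command. Here is a minimal sketch, assuming AWS CLI v1 (where aws ecr get-login exists); the helper name ecr_login and the default region are my own illustrative choices, not something from the AWS tooling. Newer AWS CLI releases replaced get-login with aws ecr get-login-password, so treat this as matching the tooling of the time.

```shell
# Hypothetical helper (not part of the aws CLI): log Docker in to ECR.
# Assumes AWS CLI v1, where `aws ecr get-login` prints a `docker login ...` command.
ecr_login() (
  region="${1:-ap-southeast-2}"   # assumed default region
  # Capture the generated command first so a failed AWS call is never eval'd.
  login_cmd="$(aws ecr get-login --region "$region")" || exit 1
  # Run the printed `docker login ...` command.
  eval "$login_cmd"
)
```

Calling ecr_login with no arguments would log in against ap-southeast-2; pass another region name as the first argument if your registry lives elsewhere.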

Habitat put the containers into namespaces of billmeyer/mongodb and mattray/national-parks, so we’ll need to create these within ECR.

$ aws ecr create-repository --repository-name billmeyer/mongodb
...
$ aws ecr create-repository --repository-name mattray/national-parks
...

Once we have these, we’ll tag and push our images to ECR (note that you’ll need your aws_account_id and your region, ap-southeast-2 in my case).

$ docker tag billmeyer/mongodb:latest aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/billmeyer/mongodb:latest
$ docker tag mattray/national-parks:latest aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/mattray/national-parks:latest
$ docker push aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/billmeyer/mongodb:latest
The push refers to a repository [aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/billmeyer/mongodb]
2922e8bbae38: Pushed
latest: digest: sha256:105add47da75fb85ba605a0bdf58a4877705c80d656955b55792005267365a11 size: 5920
$ docker push aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/mattray/national-parks:latest
The push refers to a repository [aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/mattray/national-parks]
b3ddf26b58dc: Pushed
latest: digest: sha256:9a74d0cddd5688e126d328527d63c5225d2ce320da67cadfc73fdf92f2fd1dcf size: 6676
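Since the ECR image name always follows the same account/region/repository pattern, the tag-and-push pair is easy to script. The sketch below is illustrative only: the function names and the DRY_RUN switch are hypothetical conveniences of mine, and it assumes the local image is named the same as the ECR repository, as it is in this demo.

```shell
# Hypothetical helpers; the names and the DRY_RUN switch are illustrative.

# Build the fully qualified ECR image name:
#   <account_id>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>
ecr_image_uri() {
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$1" "$2" "$3" "${4:-latest}"
}

# Tag a local image for ECR and push it.
# With DRY_RUN=1 the docker commands are printed instead of executed.
push_to_ecr() (
  account_id="$1"; region="$2"; repo="$3"; tag="${4:-latest}"
  target="$(ecr_image_uri "$account_id" "$region" "$repo" "$tag")"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "docker tag $repo:$tag $target"
    echo "docker push $target"
  else
    docker tag "$repo:$tag" "$target" && docker push "$target"
  fi
)
```

For example, push_to_ecr aws_account_id ap-southeast-2 billmeyer/mongodb would tag and push the Mongo image; setting DRY_RUN=1 first lets you check the generated names before anything is uploaded.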

EC2 Container Service

Now that our docker images are pushed to ECR, let’s run them on Amazon’s ECS. First we’ll need to set up our ecs-cli tooling:

$ ecs-cli configure --cluster hab-demo

which creates a ~/.ecs/config file that may need your credentials. With that in place, we can provision EC2 instances to host our containers.

$ ecs-cli up --keypair mattray-apac --capability-iam --size 2 --instance-type t2.medium --port 22

This creates a CloudFormation stack with two t2.medium Amazon Linux hosts tuned for ECS, with SSH access (port 22) open. If you have an existing VPC, you could add the cluster to it and attach a security group opening up any additional ports you may need. For this demo I went into the AWS console and opened inbound port 8080.
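If you would rather not click through the console, the same inbound rule can be added from the command line. This is a sketch rather than what I actually ran: the helper name is hypothetical, you have to supply the ID of the security group that the stack created, and the default CIDR of 0.0.0.0/0 opens the port to the whole internet, which is only reasonable for a short-lived demo.

```shell
# Hypothetical helper: open one inbound TCP port on a security group.
# The group ID is a placeholder you must look up yourself;
# the default CIDR 0.0.0.0/0 exposes the port to everyone.
open_inbound_port() (
  group_id="$1"; port="$2"; cidr="${3:-0.0.0.0/0}"
  aws ec2 authorize-security-group-ingress \
    --group-id "$group_id" --protocol tcp --port "$port" --cidr "$cidr"
)
```

Usage would look like open_inbound_port sg-xxxxxxxx 8080, with the real group ID substituted for the placeholder.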

The National Parks application is described in a Docker Compose file, np-demo.yml:

version: '2'
services:
  mongo:
    image: aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/billmeyer/mongodb:latest
    hostname: "mongodb"
  national-parks:
    image: aws_account_id.dkr.ecr.ap-southeast-2.amazonaws.com/mattray/national-parks:latest
    ports:
      - "8080:8080"
    links:
      - mongo
    command: --peer mongodb --bind database:mongodb.default

We define the mongo and national-parks services and use the Docker images from ECR. The Docker Compose documentation indicates that links should create /etc/hosts entries, but this does not appear to work with ECS currently, so we assign hostname: "mongodb" to the mongo service. That lets Habitat peer to this node automatically (--peer mongodb) and connect the National Parks Tomcat application to Mongo. links does still manage the deployment order of the containers, so it’s worth using. We launch our ECS task with

$ ecs-cli compose --file np-demo.yml -p np-demo up

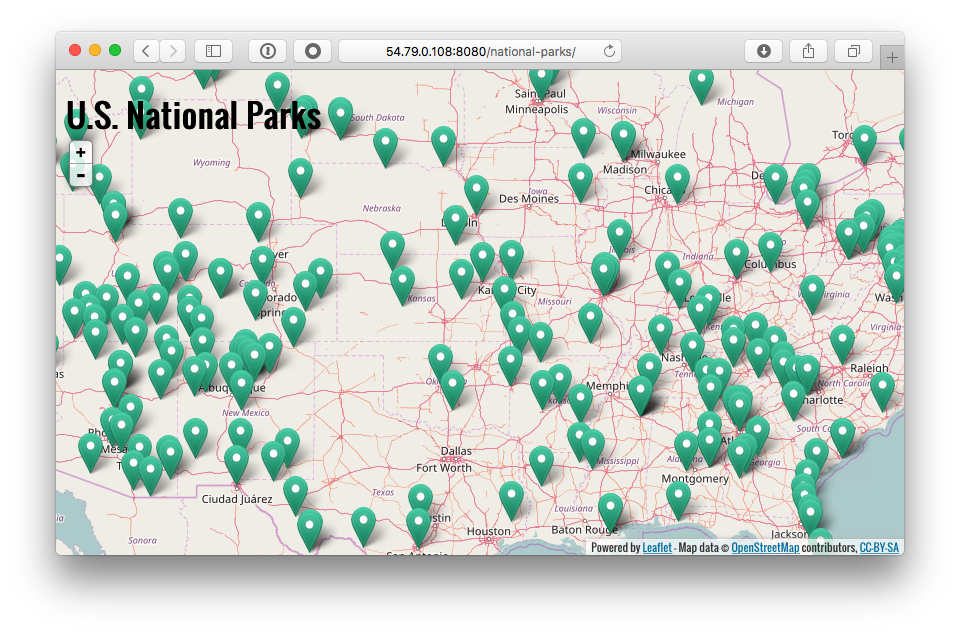

From the AWS console, find the public IP address of the ECS host in the cluster and connect to it at http://ecs-host-ip:8080/national-parks
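The console lookup can also be approximated with the aws CLI by walking from the cluster to its container instances and then to the underlying EC2 hosts. This is a rough sketch under a few assumptions: the helper name is hypothetical, the cluster name defaults to the hab-demo cluster configured earlier, and the hosts have public IP addresses.

```shell
# Hypothetical helper: print the public IPs of the EC2 hosts in an ECS cluster.
ecs_host_ips() (
  cluster="${1:-hab-demo}"
  # 1. Container instance ARNs registered with the cluster.
  arns="$(aws ecs list-container-instances --cluster "$cluster" \
            --query 'containerInstanceArns[]' --output text)" || exit 1
  [ -n "$arns" ] || { echo "no container instances in $cluster" >&2; exit 1; }
  # 2. Map the ARNs to EC2 instance IDs.
  #    $arns is deliberately unquoted so each ARN becomes its own argument.
  ids="$(aws ecs describe-container-instances --cluster "$cluster" \
           --container-instances $arns \
           --query 'containerInstances[].ec2InstanceId' --output text)" || exit 1
  # 3. Look up the public IP address of each instance.
  aws ec2 describe-instances --instance-ids $ids \
    --query 'Reservations[].Instances[].PublicIpAddress' --output text
)
```

Running ecs_host_ips hab-demo should print one address per host, any of which can stand in for ecs-host-ip in the URL above.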

You can also SSH to this host to run docker commands locally (e.g., docker logs) for debugging purposes.
